PyTorch - FashionMNIST + LeNet-5

创始人

2025-05-30 01:57:05

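Contents

- Project Overview
- Dataset Overview - FashionMNIST
- Algorithm Overview - LeNet-5
- LeNet-5 Network Architecture
- Implementation
- Data Preparation
- Downloading the Dataset
- Inspecting the Data
- Defining the Network
- Training
- Setting Parameters
- Training Function
- Validation Function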

Project Overview

Reference

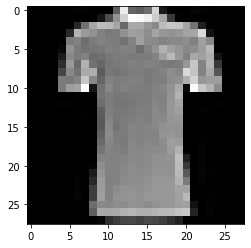

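Dataset Overview - FashionMNIST

- Fashion-MNIST consists of images from 10 classes. Each class has 6,000 images in the training set (train dataset) and 1,000 images in the test set (test dataset).

- Each image is a 28 × 28 grayscale image.

- The ten classes are: t-shirt/top, trouser, pullover, dress, coat, sandal, shirt, sneaker, bag, ankle boot.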
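When reading predictions it helps to map the integer labels 0-9 back to names. A minimal sketch; the list assumes torchvision's label order, which torchvision also exposes as `datasets.FashionMNIST.classes`, and `label_to_name` is a hypothetical helper:

```python
# Class names in label-index order (assumed to match torchvision's
# FashionMNIST.classes: 0 = T-shirt/top, ..., 9 = Ankle boot).
FASHION_MNIST_CLASSES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_to_name(label: int) -> str:
    """Map an integer label (0-9) to its human-readable class name."""
    if not 0 <= label < len(FASHION_MNIST_CLASSES):
        raise ValueError(f"label must be in [0, 9], got {label}")
    return FASHION_MNIST_CLASSES[label]

print(label_to_name(0))  # T-shirt/top
print(label_to_name(9))  # Ankle boot
```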

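Algorithm Overview - LeNet-5

Here, LeNet-5 is used to classify the clothing images in Fashion-MNIST.

LeNet is one of the earliest published convolutional neural networks. It was proposed in 1989 by Yann LeCun, then a researcher at AT&T Bell Labs (and is named after him), to recognize handwritten digits in images.

At the time, Yann LeCun published the first study that successfully trained a convolutional neural network with backpropagation; the work was the culmination of more than a decade of neural-network research and development.

LeNet achieved results comparable to those of support vector machines and became a mainstream approach in supervised learning. LeNet was widely used in automated teller machines (ATMs) to help recognize the digits on checks being processed. To this day, some ATMs still run code written in the 1990s by Yann LeCun and his colleague Leon Bottou.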

Further Reading

- LeNet-5 - A Classic CNN Architecture: https://www.datasciencecentral.com/lenet-5-a-classic-cnn-architecture/

- D2L: "6.6. Convolutional Neural Networks (LeNet)" (Chinese edition): https://zh.d2l.ai/chapter_convolutional-neural-networks/lenet.html

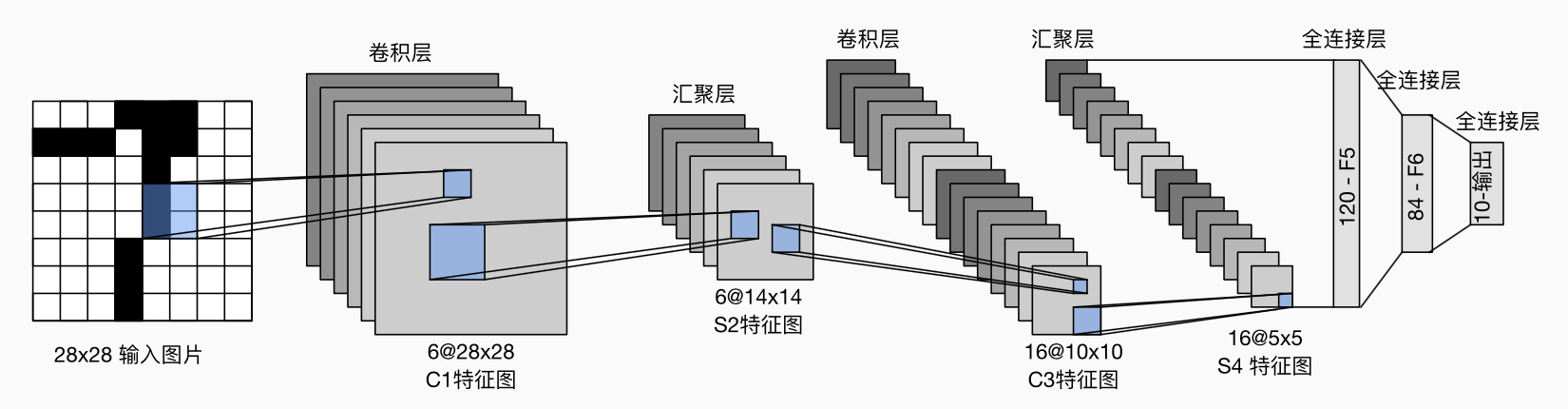

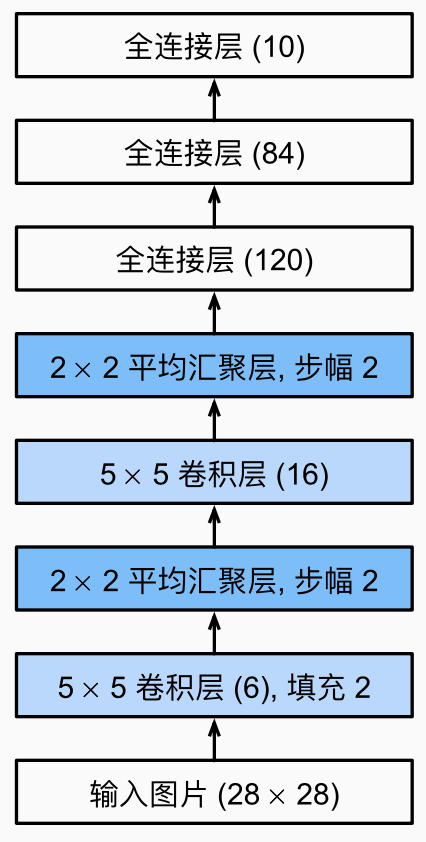

LeNet-5 Network Architecture

Overall, LeNet-5 consists of two parts:

- Convolutional encoder: two convolutional layers;

- Dense block: three fully connected layers.

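The notes below take a single-channel 28 × 28 input image and a 10-class output as an example.

Notes:

- The basic unit of each convolutional block is a convolutional layer, a sigmoid activation function, and an average pooling layer.

- Each convolutional layer uses a 5 × 5 kernel and a sigmoid activation function.

- The first convolutional layer has 6 output channels and the second has 16.

- Each 2 × 2 pooling operation (stride 2) reduces dimensionality by a factor of 4 through spatial downsampling.

- The output of a convolutional layer has shape (batch size, number of channels, height, width).

- To pass the output of the convolutional block to the dense block, each example in the minibatch must be flattened.

  In other words, the four-dimensional input is converted into the two-dimensional input that fully connected layers expect.

  The first dimension of this two-dimensional representation indexes the examples in the minibatch; the second gives the flat vector representation of each example.

- The dense block of LeNet-5 has three fully connected layers with 120, 84 and 10 outputs, respectively.

  Because this is a classification task, the 10 dimensions of the output layer correspond to the number of possible output classes.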
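The structure described above can be sketched with `nn.Sequential`. This is a minimal sketch in the style of the D2L chapter listed above; `padding=2` on the first convolution is an assumption not stated in the notes (it keeps the first feature map at 28 × 28, mimicking the 32 × 32 input of the original LeNet-5), and `trace_shapes` is a hypothetical helper that records each layer's output shape.

```python
import torch
from torch import nn

# LeNet-5 following the notes above. Assumption: padding=2 on the first
# convolution so a 28x28 input behaves like the original 32x32 input.
lenet5 = nn.Sequential(
    # Convolutional encoder: conv -> sigmoid -> 2x2 average pooling (stride 2)
    nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    # Flatten (batch, 16, 5, 5) into (batch, 400) for the dense block
    nn.Flatten(),
    # Dense block: 120 -> 84 -> 10 outputs
    nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10),
)

def trace_shapes(model, x):
    """Run x through each layer in turn and record (layer name, output shape)."""
    shapes = []
    for layer in model:
        x = layer(x)
        shapes.append((layer.__class__.__name__, tuple(x.shape)))
    return shapes

X = torch.rand(1, 1, 28, 28)  # one random single-channel 28x28 "image"
for name, shape in trace_shapes(lenet5, X):
    print(f"{name:10s} {shape}")
```

The trace shows each 2 × 2 pooling step quartering the spatial size (28 × 28 → 14 × 14, 10 × 10 → 5 × 5) and the flatten step producing 16 × 5 × 5 = 400 features for the dense block.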

Implementation

Importing Libraries

```python
import os
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
from torchvision import transforms
from torchvision import datasets
import matplotlib.pyplot as plt
```

Data Preparation

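Downloading the Dataset

```python
# Hypothetical download directory: task_dir is not defined elsewhere in this post.
task_dir = "./data"
# image_size, batch_size and num_workers are set in the parameter section below;
# define them before running this cell.

data_transform = transforms.Compose([
    # transforms.ToPILImage(),  # depends on how the data is read; not needed with the built-in dataset
    transforms.Resize(image_size),
    transforms.ToTensor(),  # uint8 image -> float tensor in [0, 1], shape (1, 28, 28)
])

train_data = datasets.FashionMNIST(root=task_dir, train=True, download=True, transform=data_transform)
test_data = datasets.FashionMNIST(root=task_dir, train=False, download=True, transform=data_transform)

train_loader = DataLoader(train_data, batch_size=batch_size, shuffle=True, num_workers=num_workers, drop_last=True)
test_loader = DataLoader(test_data, batch_size=batch_size, shuffle=False, num_workers=num_workers)
```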
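With `drop_last=True`, the training loader discards the final incomplete batch. A quick check of what that means for the batch counts, written as a hypothetical helper in plain Python that mirrors how `len(DataLoader)` is computed for a map-style dataset with the default sampler:

```python
import math

def num_batches(num_samples: int, batch_size: int, drop_last: bool) -> int:
    """Number of batches a DataLoader yields for a map-style dataset."""
    if drop_last:
        return num_samples // batch_size          # incomplete last batch is discarded
    return math.ceil(num_samples / batch_size)    # last batch may be smaller

train_batches = num_batches(60000, 256, drop_last=True)
test_batches = num_batches(10000, 256, drop_last=False)
print(train_batches, 60000 - train_batches * 256)        # 234 96
print(test_batches, 10000 - (test_batches - 1) * 256)    # 40 16
```

So with `batch_size = 256`, 96 training images are skipped each epoch (a different 96 each time, since `shuffle=True`), while the test loader keeps a final batch of 16.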

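Inspecting the Data

```python
print(len(test_data))   # 10000
print(len(train_data))  # 60000
print(train_data[0][0].shape)  # first image: torch.Size([1, 28, 28]), a 28 x 28 grayscale image

image, label = next(iter(train_loader))
print(image.shape, label.shape)  # torch.Size([256, 1, 28, 28]) torch.Size([256])
plt.imshow(image[0][0], cmap="gray")
plt.show()
```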

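Defining the Network

Note that the network below is a LeNet-style variant rather than the exact LeNet-5 described above: it uses 32 and 64 output channels, ReLU activations, max pooling, dropout and a 512-unit hidden layer.

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Two conv blocks: conv -> ReLU -> 2x2 max pooling -> dropout
        self.conv = nn.Sequential(
            nn.Conv2d(1, 32, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, stride=2),
            nn.Dropout(0.3),
            nn.Conv2d(32, 64, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, stride=2),
            nn.Dropout(0.3),
        )
        # Fully connected classifier: 64*4*4 -> 512 -> 10
        self.fc = nn.Sequential(
            nn.Linear(64*4*4, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.conv(x)
        x = x.view(-1, 64*4*4)  # flatten (batch, 64, 4, 4) -> (batch, 1024)
        x = self.fc(x)
        # L2-normalize each sample's 10 logits (the author's choice);
        # this bounds every logit to [-1, 1] before CrossEntropyLoss
        x = nn.functional.normalize(x)
        return x
```

Training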

Setting Parameters

```python
device = "cuda:0" if torch.cuda.is_available() else "cpu"
batch_size = 256
num_workers = 0  # On Windows this should be 0; otherwise multiprocessing errors may occur
lr = 1e-3  # learning rate actually passed to the optimizer below
epochs = 4
image_size = 28

model = Net()
print(model.to(device))
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=lr)
```

Output:

```
Net(
  (conv): Sequential(
    (0): Conv2d(1, 32, kernel_size=(5, 5), stride=(1, 1))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Dropout(p=0.3, inplace=False)
    (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1))
    (5): ReLU()
    (6): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (7): Dropout(p=0.3, inplace=False)
  )
  (fc): Sequential(
    (0): Linear(in_features=1024, out_features=512, bias=True)
    (1): ReLU()
    (2): Linear(in_features=512, out_features=10, bias=True)
  )
)
```
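The `in_features=1024` of the first `Linear` layer (written as `64*4*4` in `forward`) follows from the usual output-size formula for convolution and pooling, ⌊(n + 2p − k)/s⌋ + 1, assuming dilation 1 and `ceil_mode=False` as in the printout. A small worked check in plain Python; `conv_out` is a hypothetical helper:

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

side = 28
side = conv_out(side, kernel=5)            # Conv2d(1, 32, 5)   -> 24
side = conv_out(side, kernel=2, stride=2)  # MaxPool2d(2, 2)    -> 12
side = conv_out(side, kernel=5)            # Conv2d(32, 64, 5)  -> 8
side = conv_out(side, kernel=2, stride=2)  # MaxPool2d(2, 2)    -> 4
print(side, 64 * side * side)  # 4 1024
```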

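Training Function

```python
def train(epoch):
    model.train()  # enable dropout
    train_loss = 0.0
    num_samples = 0
    for data, label in train_loader:
        # Move the batch to the same device as the model
        data, label = data.to(device), label.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, label)
        loss.backward()
        optimizer.step()
        # criterion returns the batch mean, so weight it by the batch size
        train_loss += loss.item() * data.size(0)
        num_samples += data.size(0)
    # Divide by the samples actually seen (drop_last=True skips the last partial batch)
    train_loss = train_loss / num_samples
    print('Epoch: {} \tTraining Loss: {:.6f}'.format(epoch, train_loss))
```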
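`criterion` here is `nn.CrossEntropyLoss`, which takes raw scores (logits) plus integer labels and, with the default `reduction='mean'`, returns the batch average; that is why `train` multiplies `loss.item()` by the batch size. A minimal pure-Python sketch of the per-sample quantity it computes; `cross_entropy` and `mean_cross_entropy` are hypothetical helpers, not PyTorch APIs:

```python
import math

def cross_entropy(logits, target):
    """Per-sample cross-entropy from raw scores: -log(softmax(logits)[target]).

    Computed as logsumexp(logits) - logits[target].
    """
    m = max(logits)  # subtract the max before exp() for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def mean_cross_entropy(batch_logits, targets):
    """Batch average, analogous to the default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

print(round(cross_entropy([0.0, 0.0], 0), 4))  # 0.6931, i.e. ln 2
```

Because `Net.forward` L2-normalizes the logits, every output vector has norm 1 and each logit lies in [-1, 1], so the loss is bounded away from 0; for example, `cross_entropy([1.0] + [0.0] * 9, 0)` is about 1.46.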

Validation Function

```python
def val(epoch):
    model.eval()  # disable dropout
    val_loss = 0.0
    gt_labels = []
    pred_labels = []
    with torch.no_grad():
        for data, label in test_loader:
            # Move the batch to the same device as the model
            data, label = data.to(device), label.to(device)
            output = model(data)
            preds = torch.argmax(output, 1)  # predicted class per sample
            gt_labels.append(label.cpu().numpy())
            pred_labels.append(preds.cpu().numpy())
            loss = criterion(output, label)
            val_loss += loss.item() * data.size(0)
    val_loss = val_loss / len(test_loader.dataset)
    gt_labels, pred_labels = np.concatenate(gt_labels), np.concatenate(pred_labels)
    acc = np.sum(gt_labels == pred_labels) / len(pred_labels)
    print('Epoch: {} \tValidation Loss: {:.6f}, Accuracy: {:.6f}'.format(epoch, val_loss, acc))
```
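The overall accuracy can hide weak classes (shirt versus t-shirt/top, for instance). A minimal NumPy sketch of per-class accuracy over the same `gt_labels` and `pred_labels` arrays; `per_class_accuracy` is a hypothetical helper, not part of the original code:

```python
import numpy as np

def per_class_accuracy(gt_labels, pred_labels, num_classes=10):
    """Fraction of correctly predicted samples within each true class."""
    gt = np.asarray(gt_labels)
    pred = np.asarray(pred_labels)
    totals = np.bincount(gt, minlength=num_classes)               # samples per class
    correct = np.bincount(gt[gt == pred], minlength=num_classes)  # hits per class
    # A class with no samples yields NaN (0/0); silence the warning for that case.
    with np.errstate(invalid="ignore", divide="ignore"):
        return correct / totals

acc = per_class_accuracy([0, 0, 1, 1, 2], [0, 1, 1, 1, 0], num_classes=3)
print(acc.tolist())  # [0.5, 1.0, 0.0]
```

Inside `val`, after the `np.concatenate` line, `per_class_accuracy(gt_labels, pred_labels)` would give ten values that can be paired with the class names in `test_data.classes`.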

Running the Training

```python
for epoch in range(1, epochs + 1):
    train(epoch)
    val(epoch)
```
